One of the most exciting and groundbreaking areas of research in machine learning today is that of probabilistic programming languages, which attempt to unify general-purpose programming with probabilistic modelling.

In this article, I will explain what is meant by probabilistic programming, how it is being used by companies like Google and Microsoft to push the frontiers of artificial intelligence, and why it is set to underpin much of the AI-driven transformation and innovation across sectors over the coming decade!

General Purpose Programming

Most general-purpose programming languages in common use today support one or more of the following broad paradigms:

- Functional Programming Languages – declarative paradigm whereby the application state is processed via pure functions that avoid mutable data and shared state. Example programming languages that can be used for functional programming include Haskell, Python, Clojure and indeed Java (since Java 8).

- Procedural Programming Languages – imperative paradigm whereby a series of instructions to be executed is defined, grouped into modules consisting of procedures or subroutines. Example programming languages that can be used for procedural programming include C, Pascal, COBOL and Fortran.

- Object Oriented Programming Languages – object-based paradigm whereby data and procedures are co-located within an object, often referred to as an object’s attributes and methods respectively. Example programming languages that can be used for object oriented programming include Java, Python and C++.
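Because Python appears in both the functional and the object oriented lists above, the three paradigms can be contrasted in a single language. The toy task (summing the squares of the even numbers in a list) and all function and class names below are illustrative choices for this sketch, not taken from any library.

```python
from functools import reduce
from operator import add

numbers = [1, 2, 3, 4, 5, 6]

# Functional: compose pure functions; no variable is mutated
def sum_even_squares_functional(xs):
    evens = filter(lambda n: n % 2 == 0, xs)
    squares = map(lambda n: n * n, evens)
    return reduce(add, squares, 0)

# Procedural: a sequence of instructions that updates local state step by step
def sum_even_squares_procedural(xs):
    total = 0
    for n in xs:
        if n % 2 == 0:
            total += n * n
    return total

# Object oriented: data (an attribute) and behaviour (a method) co-located in one object
class NumberBag:
    def __init__(self, xs):
        self.xs = list(xs)

    def sum_even_squares(self):
        return sum(n * n for n in self.xs if n % 2 == 0)

print(sum_even_squares_functional(numbers))   # prints 56
print(sum_even_squares_procedural(numbers))   # prints 56
print(NumberBag(numbers).sum_even_squares())  # prints 56
```

All three compute 2² + 4² + 6² = 56; only the way the computation is organised differs.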

Probabilistic programming languages (PPLs) are a new breed of languages, either entirely new or extensions of existing general-purpose languages, designed to combine inference in probabilistic models with the representational power of general-purpose programming.

Probabilistic Modelling and Inference

Let us start, as we always should, with first principles. Probabilistic models help us to draw population inferences; that is, we create mathematical models to help us understand, or test a hypothesis about, how a system or environment behaves. Probabilistic reasoning is a fundamental pillar of machine learning (ML), whereas deep learning (DL), a subfield of ML, is distinguished by its reliance on gradient-based optimization algorithms to train neural networks. Probabilistic programming languages are designed to describe probabilistic models and then perform inference in those models.

Bayesian Inference

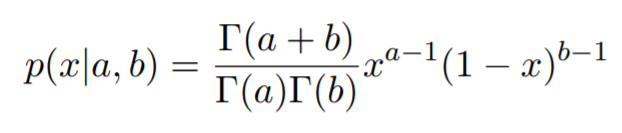

Consider the simple example of a biased coin toss – studied by all 1st year mathematics undergraduates around the world. Individual coin tosses are described by the Bernoulli distribution with parameter x, the latent variable or bias of the coin. We therefore describe the following probability distribution function in order to infer the latent variable given the results of the previously observed coin tosses:

Probability Density Function

Given that y is the value or outcome of the flipped coin, y is an observed variable, and the inference objective is described by p(x|y).

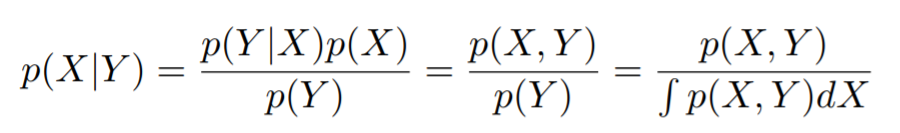

We can then use the Bayes theorem to compute this conditional probability given the probabilistic description of the coin model as a joint distribution, as follows:

Conditional Probability using the Bayes Theorem
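In symbols, the coin model combines the Bernoulli likelihood of a single toss (y = 1 for ‘heads’, y = 0 for ‘tails’) with Bayes' theorem, which expresses the posterior as the joint distribution divided by the evidence p(y). The prior p(x) is left general here:

$$
p(y \mid x) = x^{y}(1 - x)^{1 - y}, \qquad y \in \{0, 1\}
$$

$$
p(x \mid y) = \frac{p(x, y)}{p(y)} = \frac{p(y \mid x)\,p(x)}{\int_0^1 p(y \mid x')\,p(x')\,\mathrm{d}x'}
$$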

Probabilistic programming languages allow us to automate this Bayesian inference!

Traditional Programming Approach

Developing a computer program for the biased coin toss in a general-purpose programming language means deriving and coding every step of the inference by hand, which is laborious and quickly becomes a hard problem as models grow. The following calculations are required:

1.

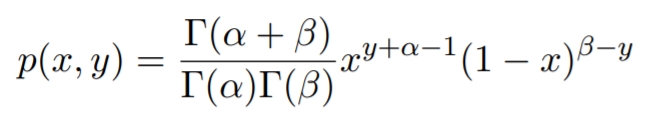

Given the latent variable x, and the observed outcome y, the value of the joint probability can be computed by:

2.

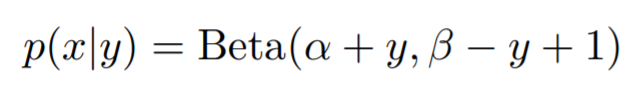

However, given than we want to compute the latent variable given previously observed variables (i.e. conditioning) rather than just the joint probability distribution, we can compute p(x|y), referred to as the posterior, as follows:

Where Beta refers to the Beta-binomial distribution i.e. continuous distribution on the interval (0, 1).

3.

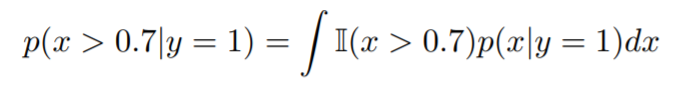

Using the above, we can calculate the latent variable i.e. the bias of the coin, given a previously observed ‘heads’ or ‘tails’. For example, to ask our computer program to compute the probability that the latent variable is greater than 0.7 given y = 1 (which refers to ‘heads’), our program would need to compute the following:
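The three calculations above can be sketched in plain Python with no probabilistic programming support. This is a minimal sketch, assuming a uniform Beta(1, 1) prior on x (the same prior used in the Edward example later in this article) and approximating each integral with a midpoint-rule grid over (0, 1); the function names are hypothetical, chosen for illustration only.

```python
def bernoulli_likelihood(y, x):
    """p(y | x) for a single toss: x if heads (y = 1), 1 - x if tails (y = 0)."""
    return x if y == 1 else 1.0 - x


def uniform_prior(x):
    """Assumed Beta(1, 1) prior: a uniform density of 1 on (0, 1)."""
    return 1.0


def posterior_prob_greater_than(threshold, y, n_grid=100_000):
    """Approximate p(x > threshold | y) on a midpoint-rule grid over (0, 1)."""
    dx = 1.0 / n_grid
    grid = [(i + 0.5) * dx for i in range(n_grid)]
    # Step 1: joint density p(x, y) = p(y | x) * p(x) at each grid point
    joint = [bernoulli_likelihood(y, x) * uniform_prior(x) for x in grid]
    # Step 2: evidence p(y) = integral of p(x, y) over x; dividing by it yields p(x | y)
    evidence = sum(joint) * dx
    # Step 3: integrate the joint over x > threshold and normalise by the evidence
    tail = sum(j for x, j in zip(grid, joint) if x > threshold) * dx
    return tail / evidence


print(round(posterior_prob_greater_than(0.7, y=1), 4))  # prints 0.51
```

Under the uniform prior the posterior after one ‘heads’ is p(x | y = 1) = 2x, so the exact answer is 1 − 0.7² = 0.51, which the grid reproduces. Even in this toy case the evidence integral had to be handled by hand; for richer models, that bookkeeping is what a PPL automates.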

Probabilistic Programming Approach

As stated above, probabilistic programming languages and tools allow us to automate Bayesian inference. In other words, they let us describe a model in language-specific probabilistic syntax and leave the derivation of the conditional (posterior) distributions to the system.

For example, coding the biased coin toss program in Edward (a Python library for probabilistic modeling, inference and criticism) takes only a few lines of code, as follows:

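```python
# Edward runs on TensorFlow 1.x
import edward as ed
import numpy as np
from edward.models import Bernoulli, Beta

ed.set_seed(42)

# Observed data: ten coin tosses, 1 = heads, 0 = tails
x_data = np.array([0, 1, 0, 0, 0, 0, 0, 0, 0, 1])

# Model: uniform Beta(1, 1) prior on the coin's bias p, and ten Bernoulli tosses
p = Beta(1.0, 1.0)
x = Bernoulli(probs=p, sample_shape=10)

# Complete conditional p(p | x), derived automatically by Edward
p_cond = ed.complete_conditional(p)

# Evaluate the posterior mean, feeding the observed tosses in for x
sess = ed.get_session()
print(sess.run(p_cond.mean(), {x: x_data}))
```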

For further details and examples, please refer to https://github.com/blei-lab/edward/tree/master/examples.
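Because the Beta prior is conjugate to the Bernoulli likelihood, the complete conditional in this particular model is also known in closed form: Beta(1 + number of heads, 1 + number of tails). The dependency-free sketch below computes those parameters for the same x_data, as a hand check on the inference. It illustrates the conjugate update only, not how Edward derives the result internally, and the function names are hypothetical.

```python
def beta_bernoulli_posterior(tosses, a=1.0, b=1.0):
    """Return (alpha, beta) of the Beta posterior over the coin's bias.

    Assumes a Beta(a, b) prior and independent tosses coded 1 = heads, 0 = tails.
    Conjugacy reduces Bayes' theorem to adding the counts to the prior parameters.
    """
    heads = sum(tosses)
    tails = len(tosses) - heads
    return a + heads, b + tails


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


x_data = [0, 1, 0, 0, 0, 0, 0, 0, 0, 1]
alpha, beta = beta_bernoulli_posterior(x_data)
print(alpha, beta)             # prints 3.0 9.0
print(beta_mean(alpha, beta))  # prints 0.25
```

With 2 heads in 10 tosses, the posterior Beta(3, 9) has mean 3 / 12 = 0.25, pulled slightly towards 0.5 from the raw frequency 0.2 by the uniform prior.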

Probabilistic programming languages allow us to integrate statistics, machine learning and general-purpose programming, not only to describe probabilistic models and perform inference, but also to provide a toolchain through which we can rapidly advance machine learning by automating the complex but tedious calculations involved in supervised, unsupervised and semi-supervised inference, as shown above.

Deep Learning and Probabilistic Programming

Recent advances in deep learning have been enabled by the development and adoption of technologies and frameworks that compute gradient-based optimizations more quickly and efficiently than was previously possible. By offloading these complex but tedious calculations to software frameworks, researchers have been freed to explore and advance deep learning and artificial intelligence, with astonishing results.
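To make "gradient-based optimization" concrete, here is a minimal sketch of gradient ascent that fits the coin's bias by maximum likelihood on the same ten tosses used in the Edward example. The gradient heads / p − tails / (1 − p) of the log-likelihood is derived by hand here; deriving and evaluating such gradients automatically, for models with millions of parameters, is the work deep learning frameworks take off the researcher's hands. The function names, learning rate and step count are arbitrary choices for this toy problem.

```python
def log_likelihood_grad(p, heads, tails):
    """Derivative of heads * log(p) + tails * log(1 - p) with respect to p (derived by hand)."""
    return heads / p - tails / (1.0 - p)


def fit_bias(tosses, p0=0.5, learning_rate=0.01, steps=1000):
    """Maximum-likelihood estimate of the coin's bias via plain gradient ascent."""
    heads = sum(tosses)
    tails = len(tosses) - heads
    p = p0
    for _ in range(steps):
        # Move p a small step uphill along the log-likelihood gradient
        p += learning_rate * log_likelihood_grad(p, heads, tails)
    return p


x_data = [0, 1, 0, 0, 0, 0, 0, 0, 0, 1]
print(round(fit_bias(x_data), 4))  # prints 0.2
```

The closed-form maximum-likelihood answer is heads / n = 2 / 10 = 0.2, so the sketch can be checked directly; unlike the Bayesian posterior, it returns a single point estimate rather than a distribution.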

Recent exciting and cutting-edge developments have also included the consolidation of deep learning with Bayesian modelling to provide a single unified engine capable of performing deep probabilistic programming! Edward is one such library: it consolidates Bayesian modelling, machine learning, deep learning and probabilistic programming, and integrates with deep learning frameworks such as TensorFlow and Keras.

The Future of Artificial Intelligence

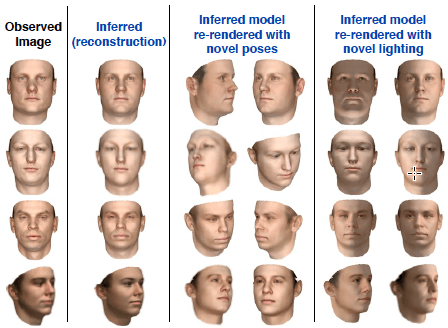

Deep probabilistic programming languages, integrated with distributed deep learning frameworks, will provide the basis for pushing the frontiers of artificial intelligence as we enter the next decade, and provide the development platform from which the next generation of innovative and intelligence-driven technologies will be engineered. The potential applications of deep probabilistic programming are as far and wide as artificial intelligence itself, limited only by our imaginations, with obvious use-cases in neuroscience, cyber security, astronomy, healthcare, computer graphics, image processing and chemistry. For example, probabilistic programming was used to generate 3D models of human faces based on 2D images using only 50 lines of code. To put that into context, a task this complex would have taken tens of thousands of lines of code using traditional general purpose programming languages!

Inverse Graphics – 3D faces rendered from 2D images using only 50 lines of PPL code. Reference: http://news.mit.edu/2015/better-probabilistic-programming-0413

Major organizations around the world are investing heavily in the research, development and utilization of deep probabilistic programming languages, including:

- Edward – developed, amongst others, by researchers at Google Brain

- Infer.NET – developed by Microsoft Research to run Bayesian inference in graphical models, for .NET developers

- Pyro – deep universal probabilistic programming language developed by Uber AI Labs.

Further Reading

If you would like to find out more, please contact Jillur Quddus: jillur.quddus@methods.co.uk or alternatively check out the following resources for suggested further reading:

- Applications of Probabilistic Programming https://arxiv.org/ftp/arxiv/papers/1606/1606.00075.pdf

- Edward Tutorials http://edwardlib.org/tutorials/

- Probabilistic Programming on Wikipedia https://en.wikipedia.org/wiki/Probabilistic_programming_language

- Probabilistic Programming and Bayesian Methods for Hackers http://camdavidsonpilon.github.io/Probabilistic-Programming-and-Bayesian-Methods-for-Hackers/